Restricted Adversarial Attacks

By LI Haoyang 2020.11.30

Content

- Robust Attack
  - Synthesizing Robust Adversarial Examples - ICML 2018
    - Expectation Over Transformation
    - Optimization Objective
    - Evaluation
- Spatial Restricted
  - One pixel attack for fooling deep neural networks - CVPR 2017
- Spectral Restricted
  - Low Frequency Adversarial Perturbation - UAI 2019
    - Low frequency subspace
    - Discrete cosine transform (DCT)
    - Sampling low frequency noise
    - Low frequency noise success rate
    - Low frequency gradient descent
    - Application to black-box attacks
      - Boundary attack
      - NES attack
    - Experiments
    - Inspirations
  - On the Effectiveness of Low Frequency Perturbations - IJCAI 2019
    - Frequency Constraints
    - Experiments
    - Inspirations

Robust Attack

Synthesizing Robust Adversarial Examples - ICML 2018

Anish Athalye, Logan Engstrom, Andrew Ilyas, Kevin Kwok. Synthesizing Robust Adversarial Examples. ICML 2018. arXiv:1707.07397

This is the source of Expectation Over Transformation (EOT), the basis of many practical adversarial attacks.

Prior work has shown that adversarial examples generated using these standard techniques often lose their adversarial nature once subjected to minor transformations (Luo et al., 2016; Lu et al., 2017).

Expectation Over Transformation

In a white-box setting, the attackers know the set of possible classes $Y$ and the space of valid inputs $X$ of the classifier, and have access to the function $P(y \mid x)$ and its gradient $\nabla_x P(y \mid x)$ for any class $y \in Y$ and input $x \in X$.

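In the standard case, with a target class $y_t$ and an attack budget given by an $\epsilon$-radius ball, the adversarial examples are generated by maximizing the log-likelihood, i.e.

$$\hat{x} = \arg\max_{x'} \log P(y_t \mid x') \quad \text{subject to} \quad \|x' - x\|_p < \epsilon.$$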

However, prior work has shown that these adversarial examples fail to remain adversarial under image transformations that occur in the real world, such as angle and viewpoint changes (Luo et al., 2016; Lu et al., 2017).

To deal with this problem, they incorporate potential transformations into the generating process and propose Expectation Over Transformation (EOT).

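With a chosen distribution $T$ of transformation functions $t$, an input $x'$ controlled by the adversary, the "true" input $t(x')$ perceived by the classifier, and a distance function $d(\cdot, \cdot)$, EOT aims to constrain the expected effective distance between the adversarial and original inputs, i.e.

$$\delta = \mathbb{E}_{t \sim T}\left[d(t(x'), t(x))\right],$$

and the corresponding optimization becomes:

$$\hat{x} = \arg\max_{x'} \mathbb{E}_{t \sim T}\left[\log P(y_t \mid t(x'))\right] \quad \text{subject to} \quad \mathbb{E}_{t \sim T}\left[d(t(x'), t(x))\right] < \epsilon, \quad x' \in X.$$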

In practice, the distribution can model perceptual distortions such as random rotation, translation, or addition of noise.

A direct and simple idea.

Within the framework, however, there is a great deal of freedom in the actual method by which examples are generated, including the choice of $T$, distance metric, and optimization method.

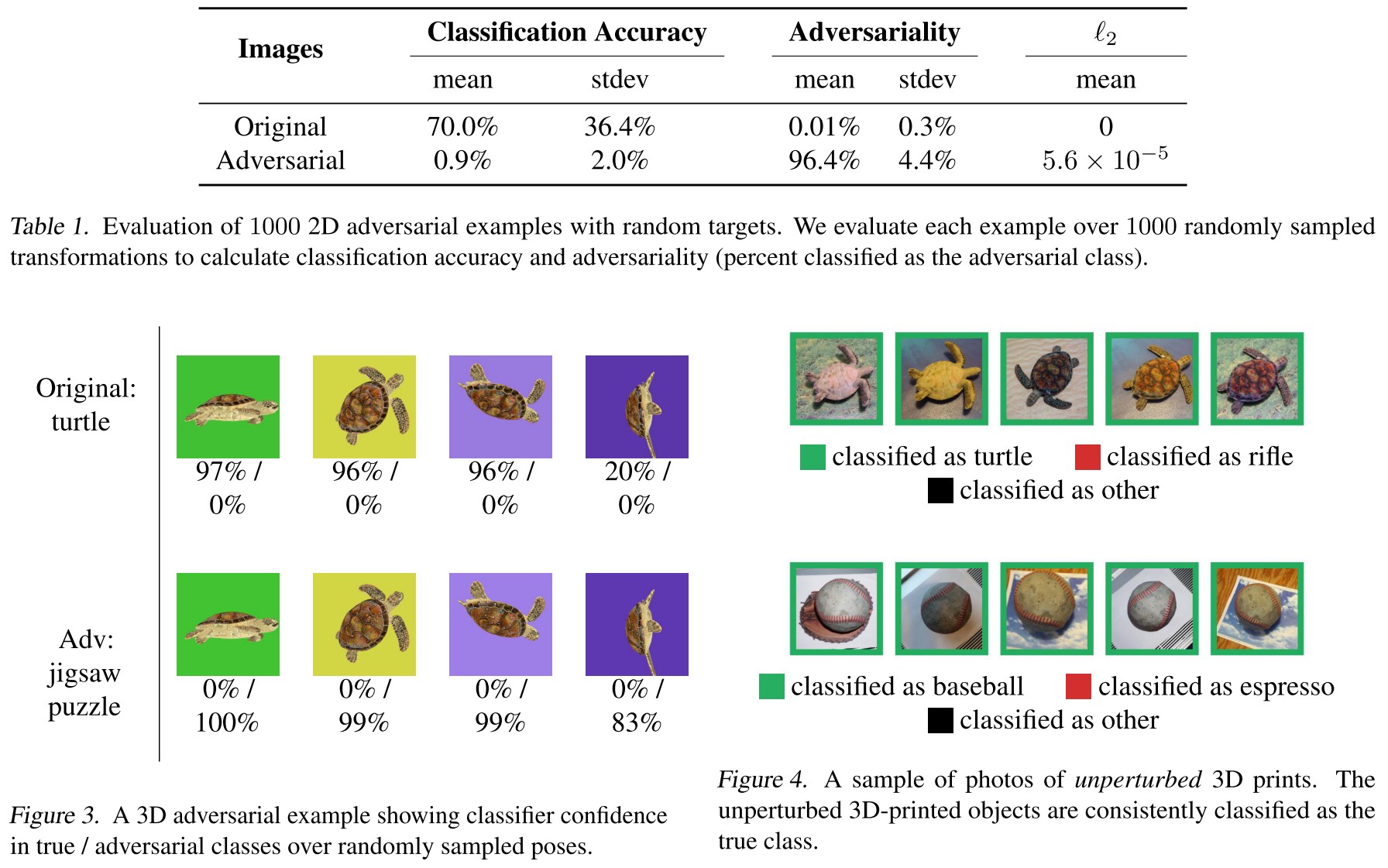

2D case

They adopt a set of random transformations of the form $t(x) = Ax + b$.

3D case

To synthesize 3D adversarial examples, we consider textures (color patterns) corresponding to some chosen 3D object (shape), and we choose a distribution of transformation functions that take a texture and render a pose of the 3D object with the texture applied.

Given a particular choice of parameters, the rendering of a texture $x$ can be written as $t(x) = Mx + b$ for some coordinate map $M$ and background $b$.

Optimization Objective

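They take the Lagrangian-relaxed form proposed by Carlini & Wagner, i.e.

$$\arg\max_{x'} \mathbb{E}_{t \sim T}\left[\log P(y_t \mid t(x'))\right] - \lambda\, \mathbb{E}_{t \sim T}\left[d(t(x'), t(x))\right],$$

and to encourage visual imperceptibility, they set $d$ to be the $\ell_2$ norm in the LAB color space, where Euclidean distance roughly corresponds to perceptual distance.

The final objective then becomes:

$$\arg\max_{x'} \mathbb{E}_{t \sim T}\left[\log P(y_t \mid t(x')) - \lambda \left\|\mathrm{LAB}(t(x')) - \mathrm{LAB}(t(x))\right\|_2\right]$$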

We use projected gradient descent to maximize the objective, and clip to the set of valid inputs (e.g. [0, 1] for images).
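A minimal sketch of EOT by gradient ascent, assuming a toy linear softmax classifier $P(y \mid x) = \mathrm{softmax}(Wx)$ on a flattened 1D "image" and a hypothetical transformation distribution of random circular shifts plus a brightness offset, so that $t(x) = Ax + b$ with $A$ a permutation. The expectation is replaced by a Monte Carlo average, the LAB distance is swapped for a plain squared $\ell_2$ penalty in pixel space (a shift preserves distances, so $t$ drops out of it), and clipping to $[0, 1]$ plays the role of the projection. None of these choices come from the paper.

```python
import numpy as np


def log_softmax(z):
    z = z - z.max()
    return z - np.log(np.exp(z).sum())


def eot_attack(W, x, target, steps=200, lr=0.1, lam=0.05, n_samples=16, seed=0):
    """Maximize E_t[log P(target | t(x'))] - lam * ||x' - x||^2 for P(y|x) = softmax(W x).

    t(x) = roll(x, k) + b: a random circular shift k (a permutation A) plus a
    random brightness offset b (hypothetical transformation distribution).
    """
    rng = np.random.default_rng(seed)
    x_adv = x.copy()
    for _ in range(steps):
        grad = np.zeros_like(x)
        for _ in range(n_samples):  # Monte Carlo estimate of the expectation over t
            k = int(rng.integers(-2, 3))
            b = rng.uniform(-0.05, 0.05)
            z = np.roll(x_adv, k) + b  # z = t(x') = A x' + b
            p = np.exp(log_softmax(W @ z))
            g_z = W[target] - p @ W  # gradient of log p_target w.r.t. z
            grad += np.roll(g_z, -k)  # chain rule: A^T g_z, i.e. undo the shift
        grad = grad / n_samples - 2 * lam * (x_adv - x)  # add the penalty gradient
        x_adv = np.clip(x_adv + lr * grad, 0.0, 1.0)  # ascent step, then clip to [0, 1]
    return x_adv
```

Averaging gradients over sampled transformations is the whole trick: the same loop with `n_samples=1` and no randomness reduces to an ordinary white-box attack on a single view.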

Evaluation

The adversariality is measured by

where is a target class different from the original class.

Many results are omitted.

Spatial Restricted

One pixel attack for fooling deep neural networks - CVPR 2017

Jiawei Su, Danilo Vasconcellos Vargas, Sakurai Kouichi. One pixel attack for fooling deep neural networks. CVPR 2017. arXiv:1710.08864

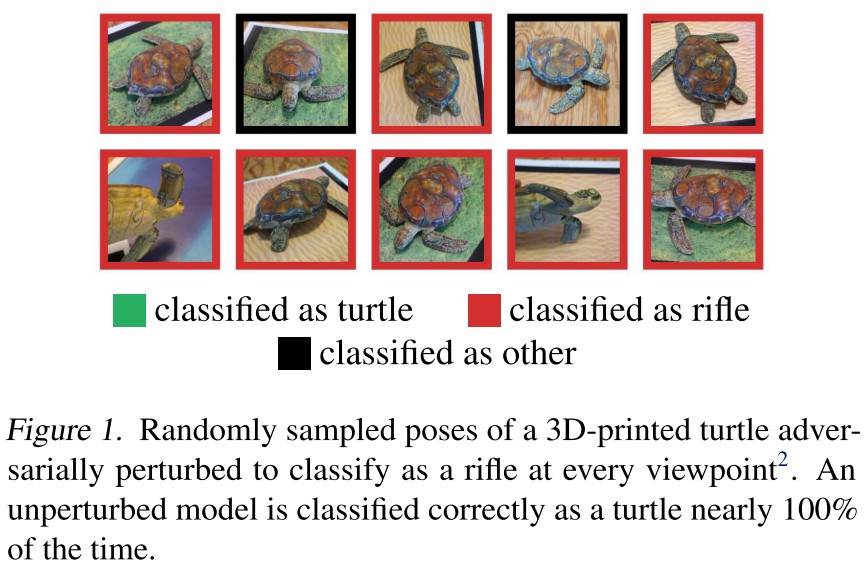

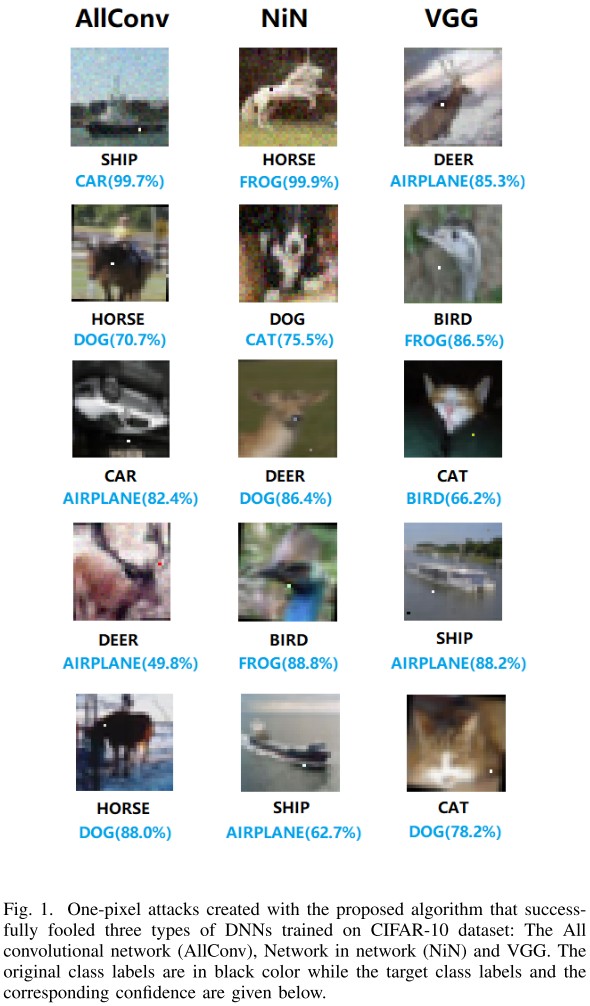

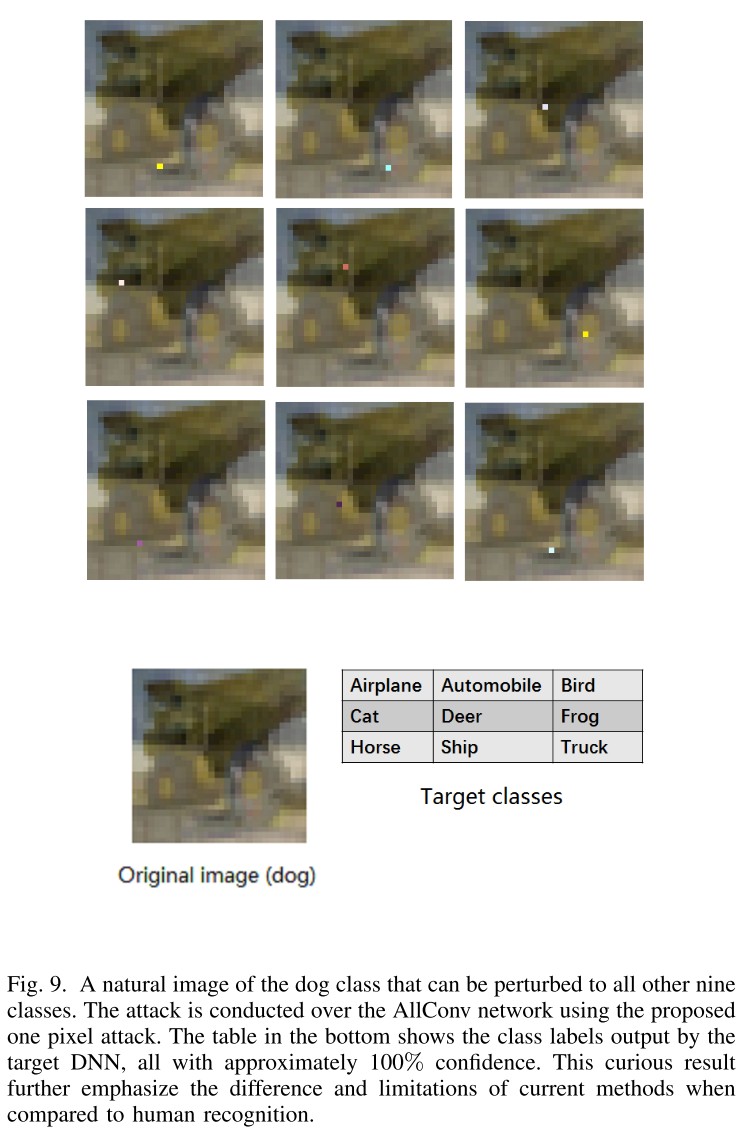

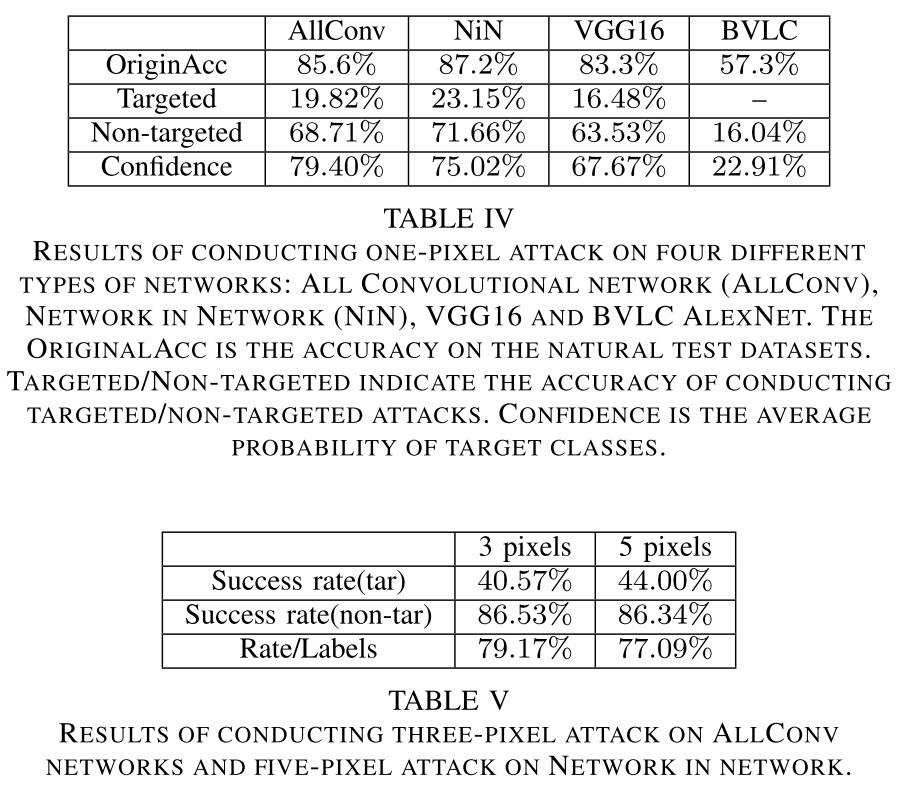

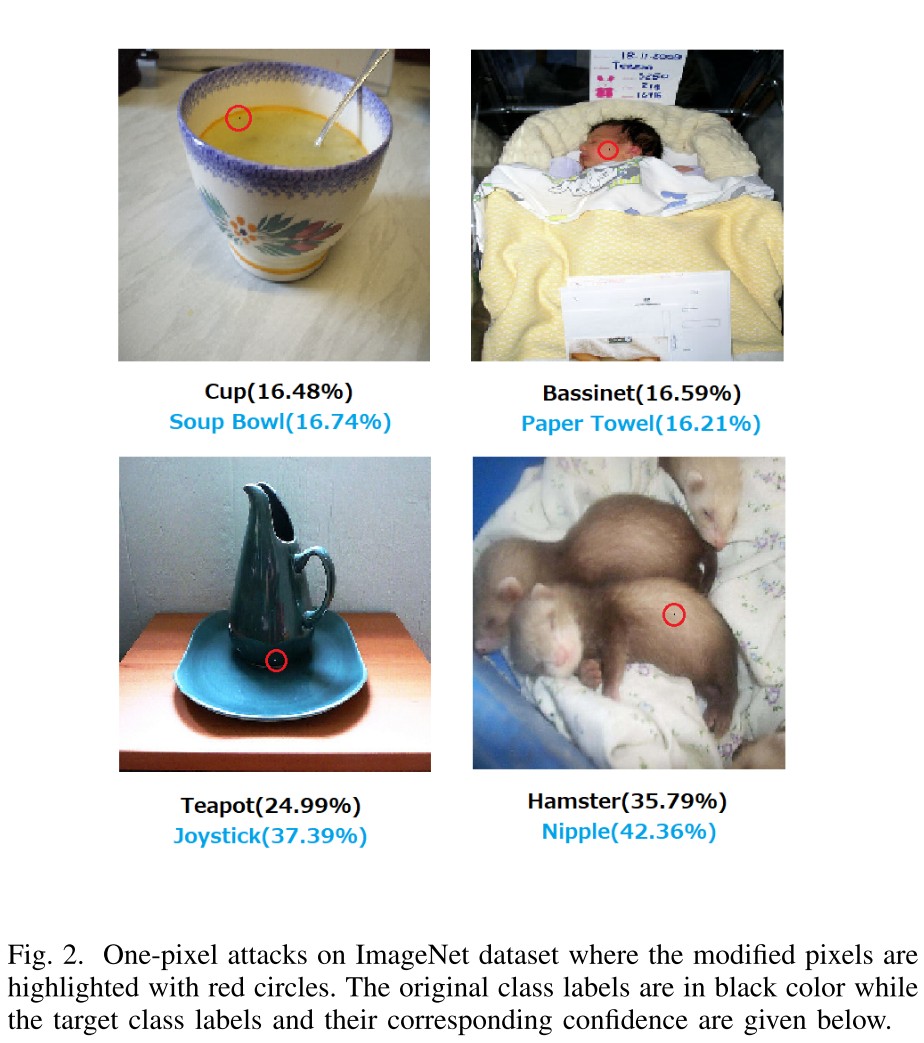

In this paper, by perturbing only one pixel with differential evolution, we propose a black-box DNN attack in a scenario where the only information available is the probability labels (Figure 1 and 2).

They use differential evolution to craft a one-pixel attack. The result is surprising but not very inspiring, so it is better to just check the results.
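The search can be sketched with a basic differential evolution loop. This is a minimal, hypothetical version for a single-channel image: each candidate encodes `(row, col, value)`, its fitness is the true-class probability returned by a black-box `predict_proba` (an assumed interface that exposes only output probabilities), and it uses DE/rand/1 mutation with greedy replacement and no crossover. Population size, iteration count and `F` are illustrative, not the paper's settings.

```python
import numpy as np


def one_pixel_attack(predict_proba, img, true_label, pop_size=50, iters=30, F=0.5, seed=0):
    """Untargeted one-pixel attack on a 2D image with values in [0, 1] via differential evolution.

    predict_proba: black-box function mapping an image to class probabilities.
    Returns (adversarial image, true-class probability on it).
    """
    rng = np.random.default_rng(seed)
    h, w = img.shape
    lo = np.array([0.0, 0.0, 0.0])
    hi = np.array([h - 1, w - 1, 1.0])

    def apply(c):  # write one pixel value at the rounded (row, col)
        out = img.copy()
        out[int(round(c[0])), int(round(c[1]))] = c[2]
        return out

    def fitness(c):  # lower true-class probability is better
        return predict_proba(apply(c))[true_label]

    pop = rng.uniform(lo, hi, size=(pop_size, 3))
    fit = np.array([fitness(c) for c in pop])
    for _ in range(iters):
        for i in range(pop_size):
            others = np.delete(np.arange(pop_size), i)
            r1, r2, r3 = rng.choice(others, 3, replace=False)
            trial = np.clip(pop[r1] + F * (pop[r2] - pop[r3]), lo, hi)  # DE/rand/1 mutation
            f = fitness(trial)
            if f < fit[i]:  # greedy one-to-one replacement
                pop[i], fit[i] = trial, f
    best = int(np.argmin(fit))
    return apply(pop[best]), fit[best]
```

Only queries to `predict_proba` are needed, which is why the attack fits the black-box setting described in the paper.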

Spectral Restricted

Low Frequency Adversarial Perturbation - UAI 2019

Chuan Guo, Jared S. Frank, Kilian Q. Weinberger. Low Frequency Adversarial Perturbation. UAI 2019. arXiv:1809.08758

In the black-box setting, the absence of gradient information often renders this search problem costly in terms of query complexity.

In this paper we propose to restrict the search for adversarial images to a low frequency domain.

They propose to search for adversarial examples in the low-frequency domain in the black-box setting, and show empirically that this speeds up the search by 2 to 4 times.

Low frequency subspace

The inherent query inefficiency of gradient estimation and decision-based attacks stems from the need to search over or randomly sample from the high-dimensional image space.

Thus the query complexity depends on the relative adversarial subspace dimensionality compared to the full image space, and finding a low-dimensional subspace that contains a high density of adversarial examples can improve these methods.

Most of the content-defining information in natural images lives in the low end of the frequency spectrum, as exploited by JPEG compression.

It is therefore plausible to assume that CNNs are trained to respond especially to low-frequency patterns in order to extract class-specific signatures from images.

Motivated by this, they propose to restrict the search space to the low-frequency spectrum.

Discrete cosine transform (DCT)

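Given a 2D image $X \in \mathbb{R}^{d \times d}$, define basis functions

$$\phi_d(i, j) = \cos\left[\frac{\pi}{d}\left(i + \frac{1}{2}\right) j\right], \quad 0 \le i, j < d.$$

The DCT transform $V = \mathrm{DCT}(X)$ is defined as

$$V_{j_1, j_2} = N_{j_1} N_{j_2} \sum_{i_1=0}^{d-1} \sum_{i_2=0}^{d-1} X_{i_1, i_2}\, \phi_d(i_1, j_1)\, \phi_d(i_2, j_2),$$

where $N_0 = \sqrt{1/d}$ and $N_j = \sqrt{2/d}$ for $j > 0$ are normalization terms to ensure that the transformation is isometric, i.e. $\|X\|_2 = \|\mathrm{DCT}(X)\|_2$.

The entry $V_{j_1, j_2}$ corresponds to the magnitude of the wave $\phi_d(i_1, j_1)\, \phi_d(i_2, j_2)$, with lower frequencies represented by lower $j_1, j_2$.

The inverse DCT, i.e. $X = \mathrm{IDCT}(V)$, is

$$X_{i_1, i_2} = \sum_{j_1=0}^{d-1} \sum_{j_2=0}^{d-1} N_{j_1} N_{j_2}\, V_{j_1, j_2}\, \phi_d(i_1, j_1)\, \phi_d(i_2, j_2).$$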

Both DCT and IDCT are applied channel-wise independently for images with multiple channels.
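The DCT and IDCT above can be written as matrix products with the orthonormal DCT-II basis. A minimal NumPy sketch, assuming square single-channel $d \times d$ inputs (apply it per channel for color images); `dct_matrix`, `dct2` and `idct2` are illustrative names, not from the paper.

```python
import numpy as np


def dct_matrix(d):
    """C[j, i] = N_j * cos(pi / d * (i + 1/2) * j), with N_0 = sqrt(1/d), N_j = sqrt(2/d) otherwise."""
    i = np.arange(d)
    j = np.arange(d)[:, None]
    C = np.cos(np.pi / d * (i + 0.5) * j)
    C[0] *= np.sqrt(1.0 / d)
    C[1:] *= np.sqrt(2.0 / d)
    return C


def dct2(X):
    """V = DCT(X): V[j1, j2] = N_j1 N_j2 sum_{i1,i2} X[i1, i2] phi(i1, j1) phi(i2, j2)."""
    C = dct_matrix(X.shape[0])
    return C @ X @ C.T


def idct2(V):
    """X = IDCT(V); C is orthogonal, so the inverse is its transpose."""
    C = dct_matrix(V.shape[0])
    return C.T @ V @ C
```

As a quick sanity check, a constant image has all of its energy in $V_{0,0}$, the lowest frequency, and `idct2(dct2(X))` recovers `X` up to floating-point error.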

Sampling low frequency noise

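Given any distribution $P$ over $\mathbb{R}$, one can sample a random matrix $\hat{\eta} \in \mathbb{R}^{d \times d}$ in frequency space so that

$$\hat{\eta}_{j_1, j_2} \sim P \ \text{(i.i.d.) if } 0 \le j_1, j_2 < rd, \qquad \hat{\eta}_{j_1, j_2} = 0 \ \text{otherwise},$$

where $r \in (0, 1]$ is a ratio parameter controlling the size of the low-frequency region.

The corresponding noise "image" in pixel space is then defined by

$$\eta = \mathrm{IDCT}(\hat{\eta}).$$

By definition, $\eta$ has non-zero cosine wave coefficients only in frequencies lower than $rd$.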

This distribution of low frequency noise is denoted as .
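A minimal sketch of sampling from this low-frequency noise distribution, assuming $P = \mathcal{N}(0, 1)$, square $d \times d$ channels, and $rd$ rounded down to an integer. The optional `radius` argument is a hypothetical addition: it rescales all $rd \times rd$ coefficient blocks jointly to a fixed norm, which corresponds to sampling uniformly on a sphere of radius $\rho$ in LF-DCT space as in the success-rate experiment. Because the IDCT is isometric, the pixel-space noise then has the same $\ell_2$ norm.

```python
import numpy as np


def dct_matrix(d):
    # orthonormal DCT-II basis, C[j, i] = N_j cos(pi / d * (i + 1/2) * j)
    i = np.arange(d)
    j = np.arange(d)[:, None]
    C = np.cos(np.pi / d * (i + 0.5) * j)
    C[0] *= np.sqrt(1.0 / d)
    C[1:] *= np.sqrt(2.0 / d)
    return C


def sample_low_freq_noise(d, r, rng, channels=3, radius=None):
    """Sample eta = IDCT(eta_hat), with eta_hat ~ N(0, 1) on the top-left rd x rd block, 0 elsewhere."""
    k = max(1, int(r * d))
    coeffs = rng.standard_normal((channels, k, k))
    if radius is not None:  # Gaussian direction + fixed norm = uniform on the sphere
        coeffs *= radius / np.linalg.norm(coeffs)
    C = dct_matrix(d)
    eta = np.empty((channels, d, d))
    for c in range(channels):
        eta_hat = np.zeros((d, d))
        eta_hat[:k, :k] = coeffs[c]
        eta[c] = C.T @ eta_hat @ C  # channel-wise IDCT
    return eta
```

With `r=1` every coefficient is sampled, which (for Gaussian $P$) is the same as sampling i.i.d. noise directly in pixel space.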

Low frequency noise success rate

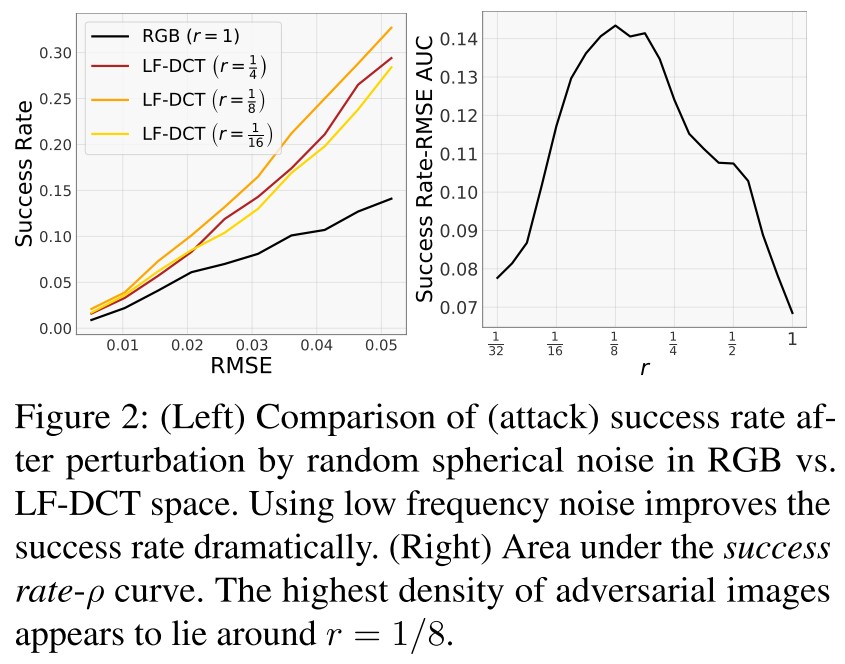

They measure the success rate of random noise sampled in RGB space and in LF-DCT space against a ResNet-50 trained on ImageNet.

We sample the noise vector η uniformly from the surface of the unit sphere of radius ρ > 0 in the rd × rd LF-DCT space and project it back to RGB through the IDCT transform.

Here they conclude:

- As expected, the success rate increases with the magnitude of perturbation across all values of $r$.

- There appears to be a sweet spot around , which corresponds to a reduction of dimensionality by .

- The worst success rate is achieved with $r = 1$, which corresponds to no dimensionality reduction (and is identical to sampling in the original RGB space).

This is further supported by Hold me tight!, where adversarially trained models also appear to be more sensitive to low-frequency changes.

Low frequency gradient descent

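Let $\ell(x')$ denote the adversarial loss, e.g. the C&W loss.

For a given $d$ and $r$, define $\mathrm{IDCT}_r : \mathbb{R}^{rd \times rd} \to \mathbb{R}^{d \times d}$ by

$$\mathrm{IDCT}_r(\tilde{\delta}) = \mathrm{IDCT}\left(\begin{bmatrix} \tilde{\delta} & 0 \\ 0 & 0 \end{bmatrix}\right),$$

i.e. zero-pad $\tilde{\delta}$ to $d \times d$ and apply the inverse DCT.

The low-frequency perturbation domain is then parametrized as

$$x' = x + \mathrm{IDCT}_r(\tilde{\delta}).$$

Denote the vectorizations of $\tilde{\delta}$ and $\mathrm{IDCT}_r(\tilde{\delta})$ as $\tilde{v}$ and $v$, i.e. $\tilde{v} = \mathrm{vec}(\tilde{\delta})$ and $v = \mathrm{vec}(\mathrm{IDCT}_r(\tilde{\delta}))$.

This is the commonly used flattening, e.g. `x.reshape(-1)` in NumPy.

Each coordinate of $v$ is a linear function of $\tilde{v}$.

For any vector $w \in \mathbb{R}^{d^2}$, its product with the Jacobian of $\mathrm{IDCT}_r$ is given by

$$w^\top \frac{\partial v}{\partial \tilde{v}} = \mathrm{vec}\left(\left[\mathrm{DCT}(\mathrm{mat}(w))\right]_{rd}\right)^\top,$$

where $\mathrm{mat}(\cdot)$ reshapes a vector back into a $d \times d$ matrix and $[\cdot]_{rd}$ keeps only the top-left $rd \times rd$ block.

Thus it's possible to apply the chain rule to compute

$$\nabla_{\tilde{v}}\, \ell = \left(\frac{\partial v}{\partial \tilde{v}}\right)^\top \nabla_v \ell = \mathrm{vec}\left(\left[\mathrm{DCT}(\nabla_{x'} \ell)\right]_{rd}\right).$$

The first equality is the chain rule, and the second follows from the Jacobian product above with $w = \nabla_v \ell$. The result is equivalent to applying the DCT to the pixel-space gradient and dropping the high frequency coefficients.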

This makes the implementation quite simple, and it seems to suggest that the high-frequency part of the gradient acts somewhat like noise for the adversarial attack.
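A minimal single-channel sketch of this low-frequency gradient descent, assuming the pixel-space loss gradient is available through a hypothetical callable `loss_grad`. It optimizes only the $rd \times rd$ coefficients $\tilde{\delta}$ (here `k = rd`), and the gradient with respect to them is the DCT of the pixel gradient cropped to the top-left block, as derived above. A real attack would also add a norm constraint and clipping, which are omitted here.

```python
import numpy as np


def dct_matrix(d):
    # orthonormal DCT-II basis, C[j, i] = N_j cos(pi / d * (i + 1/2) * j)
    i = np.arange(d)
    j = np.arange(d)[:, None]
    C = np.cos(np.pi / d * (i + 0.5) * j)
    C[0] *= np.sqrt(1.0 / d)
    C[1:] *= np.sqrt(2.0 / d)
    return C


def idct_r(delta_tilde, d):
    """IDCT_r: zero-pad the rd x rd coefficients to d x d, then apply the inverse DCT."""
    k = delta_tilde.shape[0]
    V = np.zeros((d, d))
    V[:k, :k] = delta_tilde
    C = dct_matrix(d)
    return C.T @ V @ C


def low_freq_grad(grad_x, k):
    """Gradient w.r.t. delta_tilde: DCT of the pixel-space gradient, top-left k x k block only."""
    C = dct_matrix(grad_x.shape[0])
    return (C @ grad_x @ C.T)[:k, :k]


def low_freq_descent(loss_grad, x, k, steps=50, lr=0.5):
    """Plain gradient descent on delta_tilde for x' = x + IDCT_r(delta_tilde)."""
    d = x.shape[0]
    delta_tilde = np.zeros((k, k))
    for _ in range(steps):
        g = loss_grad(x + idct_r(delta_tilde, d))  # pixel-space gradient at x'
        delta_tilde -= lr * low_freq_grad(g, k)
    return x + idct_r(delta_tilde, d)
```

Any existing gradient attack can be adapted this way by wrapping its gradient in `low_freq_grad` and its update in `idct_r`; the resulting perturbation never contains frequencies above `k`.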

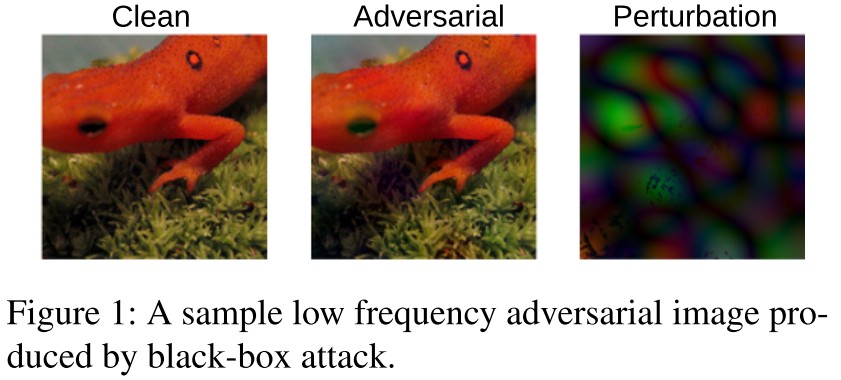

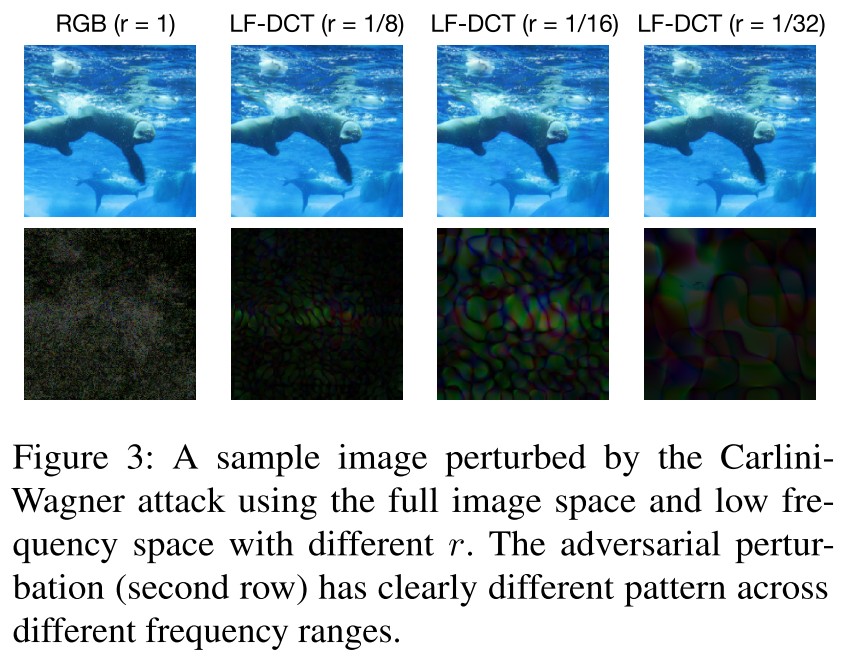

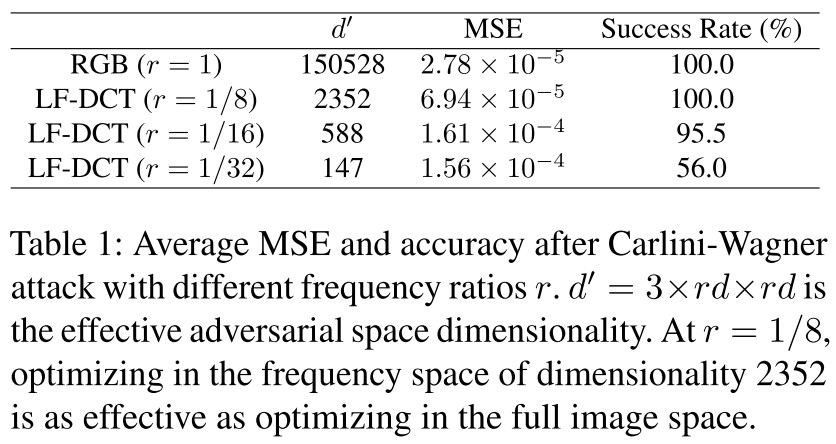

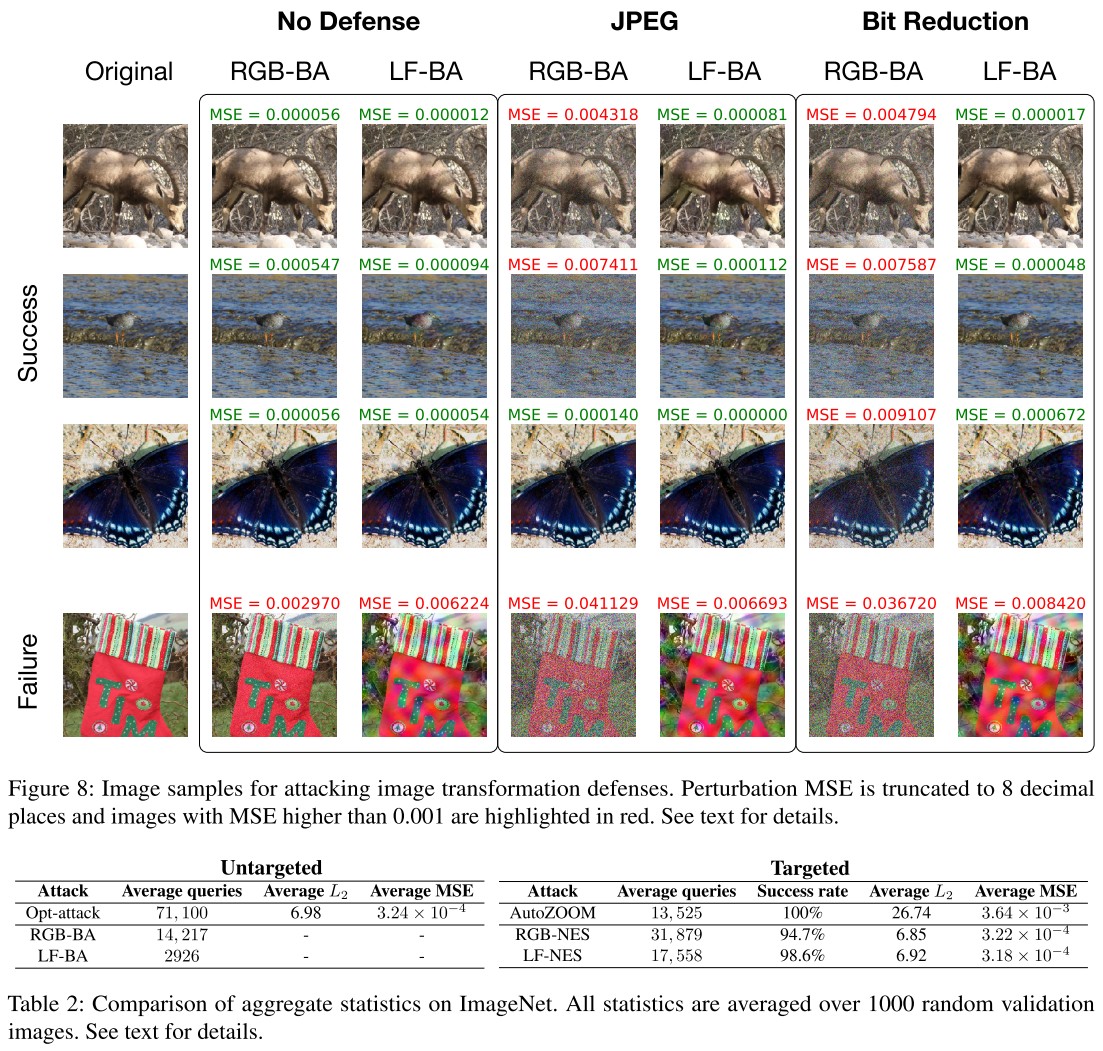

As shown in Figure 3 and Table 1, the low-frequency attack increases the MSE by about 3 times, yet the perturbation remains imperceptible to human eyes, and the generated adversarial pattern is relatively smooth.

Sharma, Ding, and Brubaker [2019] showed that low frequency gradient-based attacks enjoy greater efficiency and can transfer significantly better to defended models.

Furthermore, they observe that the benefit of low frequency perturbation is not merely due to dimensionality reduction — perturbing exclusively the high frequency components does not give the same benefit.

Application to black-box attacks

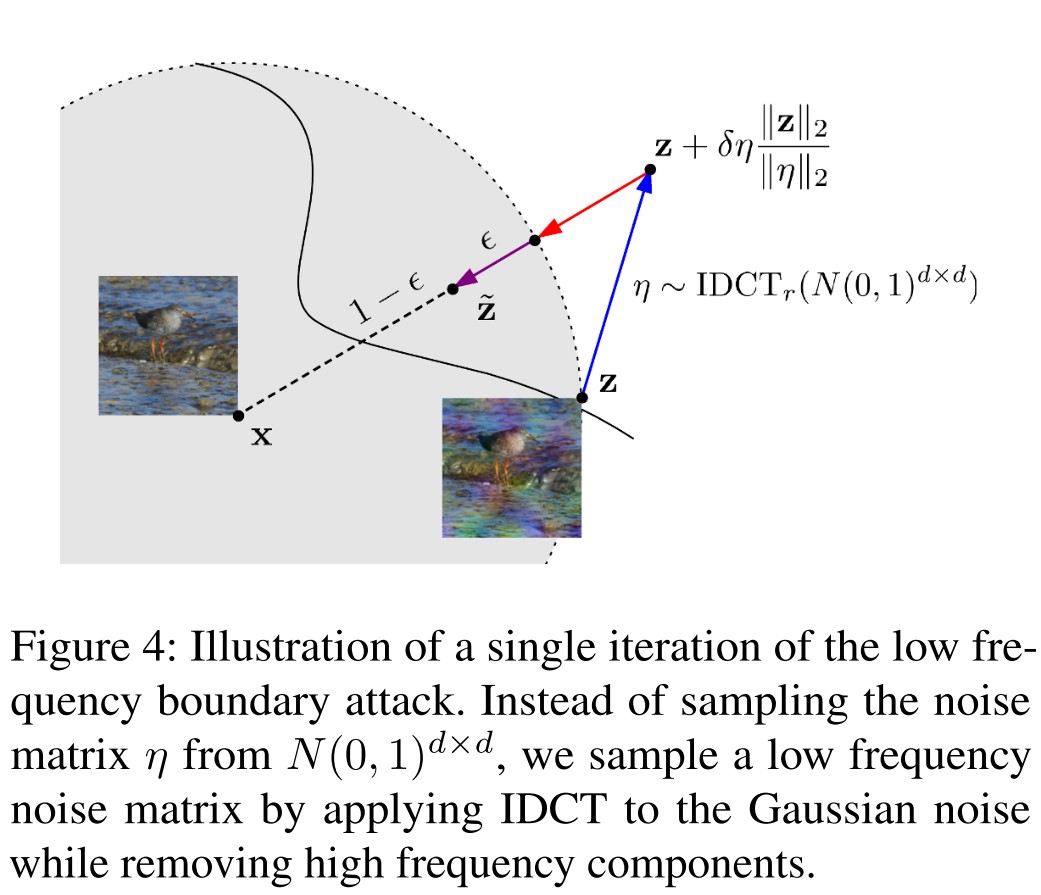

Boundary attack

Proposed in

Brendel, W.; Rauber, J.; and Bethge, M. 2017. Decision-based adversarial attacks: Reliable attacks against black-box machine learning models. CoRR abs/1712.04248

This attack works as follows:

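- Sample a noise matrix $\eta \sim \mathcal{N}(0, I)$.

- Add it to the current adversarial example $x'_t$ after appropriate scaling.

- Project the perturbed point onto the sphere centered at the original image $x$ with radius $\|x'_t - x\|_2$.

- Contract it towards $x$ while ensuring it remains adversarial, which gives the new example $x'_{t+1}$.

- Repeat steps 1 to 4.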

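It works like a gradient-free, reversed projected gradient descent: it starts from an adversarial point and moves it toward the original image while keeping it adversarial.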
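A minimal sketch of the loop above, assuming a hypothetical decision-only oracle `is_adv` and a starting point `x_init` that is already adversarial. Step sizes are fixed here for simplicity (the original attack adapts them during the run), and `sample_noise` is a hook: passing a low-frequency sampler instead of the default Gaussian gives an LF-BA-style variant.

```python
import numpy as np


def boundary_attack(is_adv, x, x_init, steps=500, sigma=0.1, eps=0.05, seed=0, sample_noise=None):
    """Minimal boundary-attack loop with fixed step sizes.

    is_adv: decision-only oracle, returns True if its input is misclassified.
    x: original image; x_init: a starting point that is already adversarial.
    sample_noise: optional noise sampler (e.g. low-frequency noise for an LF-BA-style variant).
    """
    rng = np.random.default_rng(seed)
    if sample_noise is None:
        sample_noise = lambda: rng.standard_normal(x.shape)
    x_adv = x_init.copy()
    for _ in range(steps):
        dist = np.linalg.norm(x_adv - x)
        eta = sample_noise()
        cand = x_adv + sigma * dist * eta / np.linalg.norm(eta)  # steps 1-2: scaled noise
        cand = x + (cand - x) * dist / np.linalg.norm(cand - x)  # step 3: back onto the sphere around x
        cand = cand + eps * (x - cand)  # step 4: contract toward x
        if is_adv(cand):  # only accept candidates that stay adversarial
            x_adv = cand
    return x_adv
```

Every accepted step keeps the iterate adversarial and shrinks its distance to `x` by the factor `1 - eps`, so the only thing the noise distribution changes is how often candidates get accepted.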

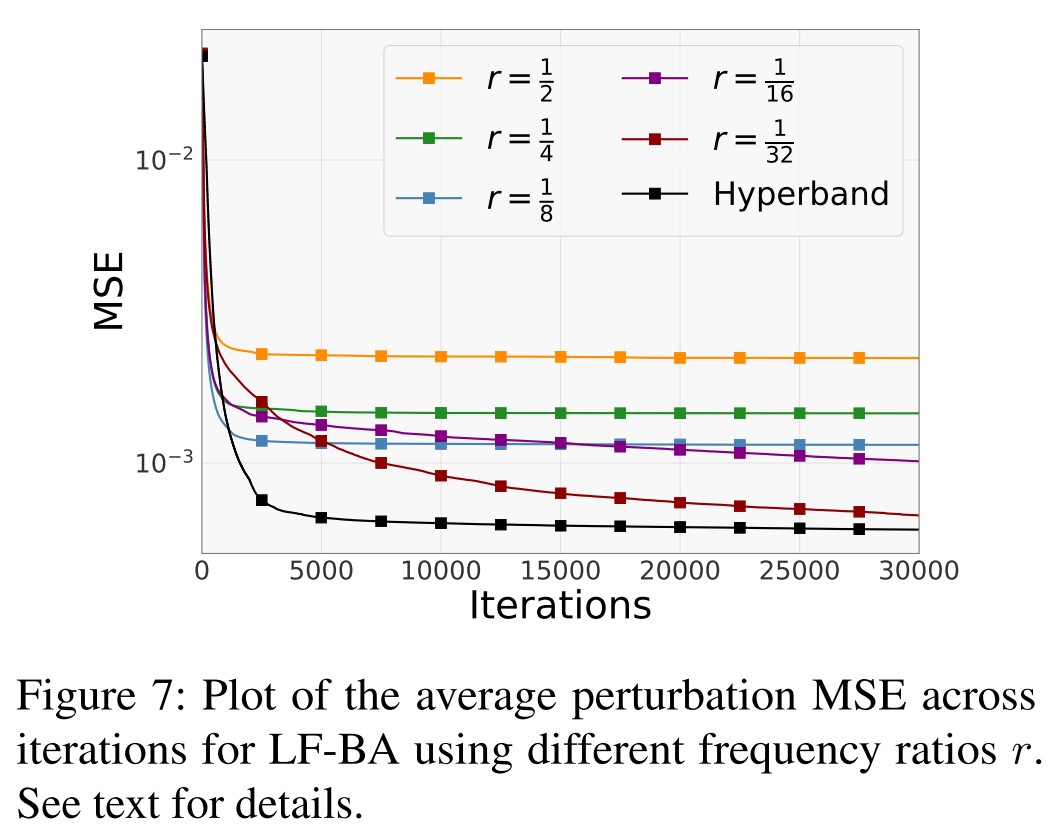

Their modified version, named the low frequency boundary attack (LF-BA), samples the noise matrix from the low frequency noise distribution defined above.

NES attack

Natural evolution strategies (NES) is a black-box optimization method proposed in

Wierstra, D.; Schaul, T.; Glasmachers, T.; Sun, Y.; Peters, J.; and Schmidhuber, J. 2014. Natural evolution strategies. Journal of Machine Learning Research 15(1):949–980

and it's used for black-box adversarial attack in

Ilyas, A.; Engstrom, L.; Athalye, A.; and Lin, J. 2018. Black-box adversarial attacks with limited queries and information. In Proceedings of the 35th International Conference on Machine Learning, ICML 2018, Stockholmsmässan, Stockholm, Sweden, July 10-15, 2018, 2142–2151

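The NES attack minimizes the loss at all points near $x'$. Specify a search distribution $\pi(\cdot \mid x')$ and minimize:

$$\min_{x'} \mathbb{E}_{x'' \sim \pi(\cdot \mid x')}\left[\ell(x'')\right] \quad \text{subject to} \quad \|x' - x\| \le \epsilon,$$

where:

- $\epsilon$ is some perceptibility threshold

- $\pi$ is chosen to be an isotropic Gaussian, i.e. $\pi(\cdot \mid x') = \mathcal{N}(x', \sigma^2 I)$

The gradient is then

$$\nabla_{x'}\, \mathbb{E}_{x'' \sim \pi(\cdot \mid x')}\left[\ell(x'')\right] = \frac{1}{\sigma}\, \mathbb{E}_{u \sim \mathcal{N}(0, I)}\left[\ell(x' + \sigma u)\, u\right].$$

Thus the problem can be minimized with stochastic gradient descent by sampling a batch of noise vectors $u_1, \dots, u_n \sim \mathcal{N}(0, I)$ and computing the stochastic gradient

$$\frac{1}{n \sigma} \sum_{i=1}^{n} \ell(x' + \sigma u_i)\, u_i.$$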

One way to interpret this update rule is that the procedure pushes away from regions of low adversarial density.

Their modified version, named low frequency NES (LF-NES), samples the noise vectors from a low-frequency subspace instead.
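A minimal sketch of the NES gradient estimate from loss queries only, assuming $\pi(\cdot \mid x') = \mathcal{N}(x', \sigma^2 I)$ and a hypothetical black-box `loss`. It uses antithetic pairs $\pm u$ (a variance-reduction trick used by Ilyas et al.), i.e. $\frac{1}{2 \sigma n} \sum_i \left[\ell(x' + \sigma u_i) - \ell(x' - \sigma u_i)\right] u_i$, which has the same expectation as the estimator above. The `sample_noise` hook is where an LF-NES-style variant would draw low-frequency noise instead; the estimate then only covers that subspace.

```python
import numpy as np


def nes_gradient(loss, x, sigma=0.01, n=50, rng=None, sample_noise=None):
    """Antithetic NES estimate of grad_x E_{u ~ N(0, I)}[loss(x + sigma * u)] from loss queries only."""
    if rng is None:
        rng = np.random.default_rng(0)
    if sample_noise is None:
        sample_noise = lambda: rng.standard_normal(x.shape)
    g = np.zeros_like(x, dtype=float)
    for _ in range(n):
        u = sample_noise()
        g += (loss(x + sigma * u) - loss(x - sigma * u)) * u  # antithetic pair (+u, -u)
    return g / (2 * sigma * n)
```

Each call costs `2 * n` queries; the estimate can then be plugged into any first-order update (e.g. a projected SGD step toward the perceptibility constraint).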

Experiments

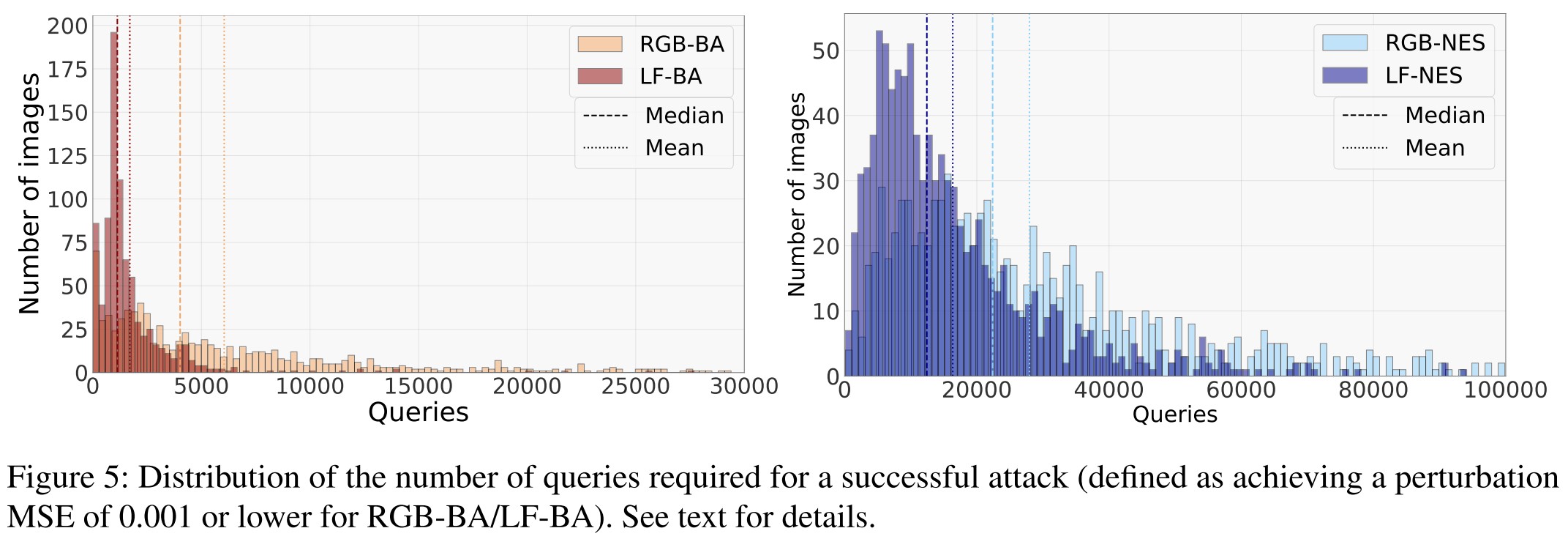

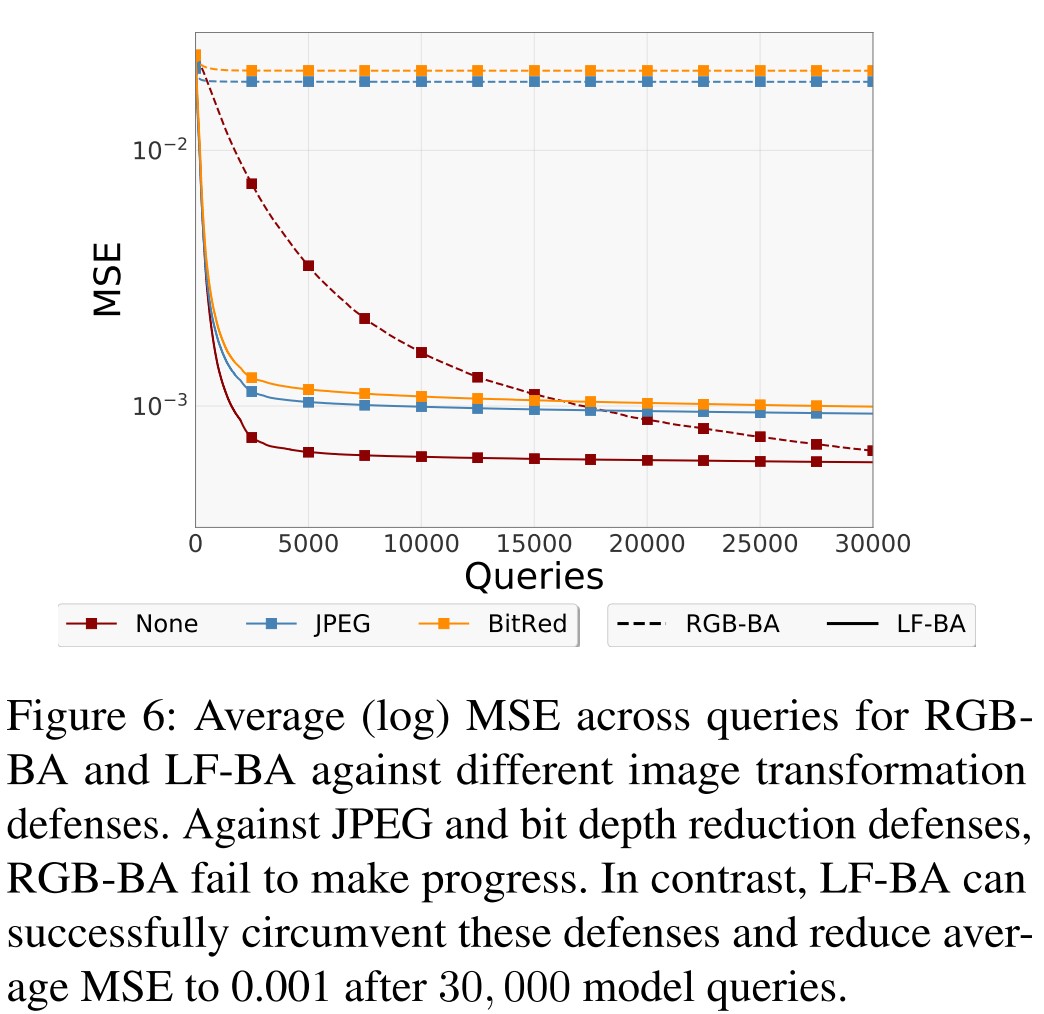

As shown in Figure 5,

The histograms of LF-BA (dark red) and LF-NES (dark blue) are shifted left compared to their Gaussian-based counterparts.

Using low-frequency perturbations reduces the queries required by either of these two black-box attacks.

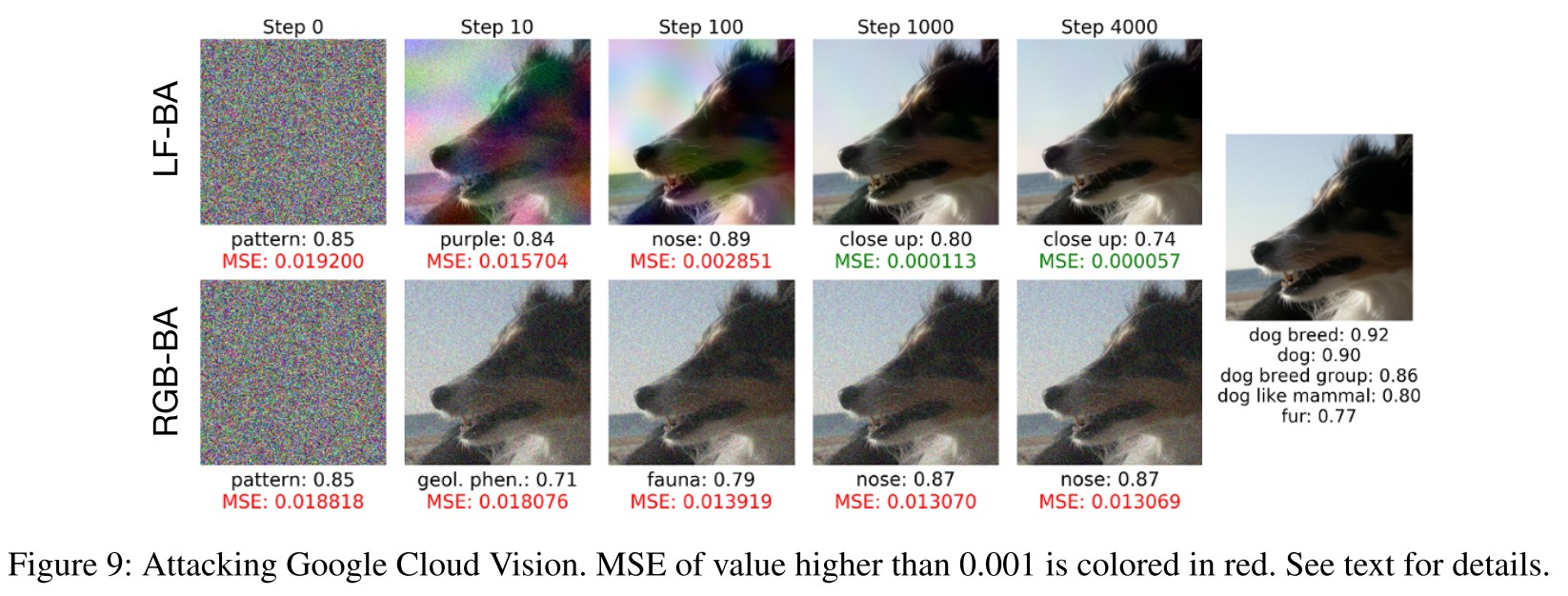

It also turns out that the low frequency attacks can breach transformation-based defenses, as well as the Google Cloud Vision API.

Inspirations

Although this paper packs in many experiments demonstrating the efficiency of searching for adversarial examples in the low-frequency domain, I think its main value lies in developing a plausible low frequency gradient descent with a very simple implementation.

I think it's possible to use this for adversarial training, and there may be surprises.

On the Effectiveness of Low Frequency Perturbations - IJCAI 2019

Yash Sharma, Gavin Weiguang Ding, Marcus Brubaker. On the Effectiveness of Low Frequency Perturbations. IJCAI 2019. arXiv:1903.00073

Recent work demonstrates that restricting the search space to low frequency components improves adversarial attacks. Here they empirically study the effect of low frequency perturbations and find that:

- The benefit does not come from dimensionality reduction.

- Adversarially trained models are roughly as vulnerable to low frequency perturbations as undefended models.

- Under the $\ell_\infty$ constraint $\epsilon = 16/255$, low frequency perturbations are perceptible.

The questions they pose are as follows:

- Is the effectiveness of low frequency perturbations simply due to the reduced search space or specifically due to the use of low frequency components?

- Under what conditions are low frequency perturbations more effective than unconstrained perturbations?

Testing against state-of-the-art ImageNet [Deng et al., 2009] defense methods, we show that, when perturbations are constrained to the low frequency subspace, they are 1) generated faster; and are 2) more transferable.

Frequency Constraints

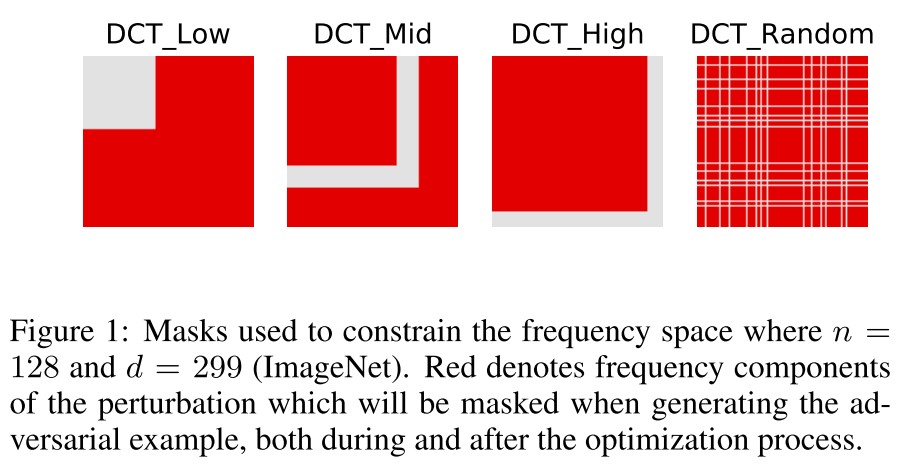

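They remove certain frequency components of the perturbation $\delta$ by applying a mask to its DCT transform $\mathrm{DCT}(\delta)$, and then reconstruct the perturbation by applying $\mathrm{IDCT}$ to the masked DCT transform, i.e.

$$\mathrm{FreqMask}(\delta) = \mathrm{IDCT}\left(\mathrm{Mask}(\mathrm{DCT}(\delta))\right).$$

The following gradient is then used to conduct attacks, where $\ell$ is the attack loss and $y$ the label:

$$\nabla_\delta\, \ell\left(x + \mathrm{FreqMask}(\delta), y\right).$$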

They choose four masks as shown in Figure 1 to study this problem.
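A minimal sketch of $\mathrm{FreqMask}$ with a few binary masks, assuming single-channel $d \times d$ perturbations. The mask shapes below (a low block, a high block, and a random set of coefficients, each keeping $k^2$ coefficients, plus a full mask) are illustrative stand-ins rather than the exact masks of Figure 1; keeping the count fixed is what lets one separate the effect of dimensionality from that of frequency. Since the DCT is orthonormal and a 0/1 mask is symmetric and idempotent, the gradient with respect to $\delta$ is simply $\mathrm{FreqMask}$ applied to the pixel-space gradient.

```python
import numpy as np


def dct_matrix(d):
    # orthonormal DCT-II basis, C[j, i] = N_j cos(pi / d * (i + 1/2) * j)
    i = np.arange(d)
    j = np.arange(d)[:, None]
    C = np.cos(np.pi / d * (i + 0.5) * j)
    C[0] *= np.sqrt(1.0 / d)
    C[1:] *= np.sqrt(2.0 / d)
    return C


def freq_mask(delta, mask):
    """FreqMask(delta) = IDCT(mask * DCT(delta)) for a 0/1 mask of shape (d, d)."""
    C = dct_matrix(delta.shape[0])
    return C.T @ ((C @ delta @ C.T) * mask) @ C


def make_masks(d, k, rng):
    """Hypothetical masks; "low", "high" and "random" each keep k*k DCT coefficients."""
    low = np.zeros((d, d))
    low[:k, :k] = 1  # lowest frequencies (top-left)
    high = np.zeros((d, d))
    high[-k:, -k:] = 1  # highest frequencies (bottom-right)
    rand = np.zeros((d, d))
    rand.flat[rng.choice(d * d, k * k, replace=False)] = 1  # random coefficients
    return {"full": np.ones((d, d)), "low": low, "high": high, "random": rand}
```

In an attack loop, the pixel-space gradient `g` would be replaced by `freq_mask(g, mask)` before each update, so the perturbation stays inside the chosen frequency set.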

Experiments

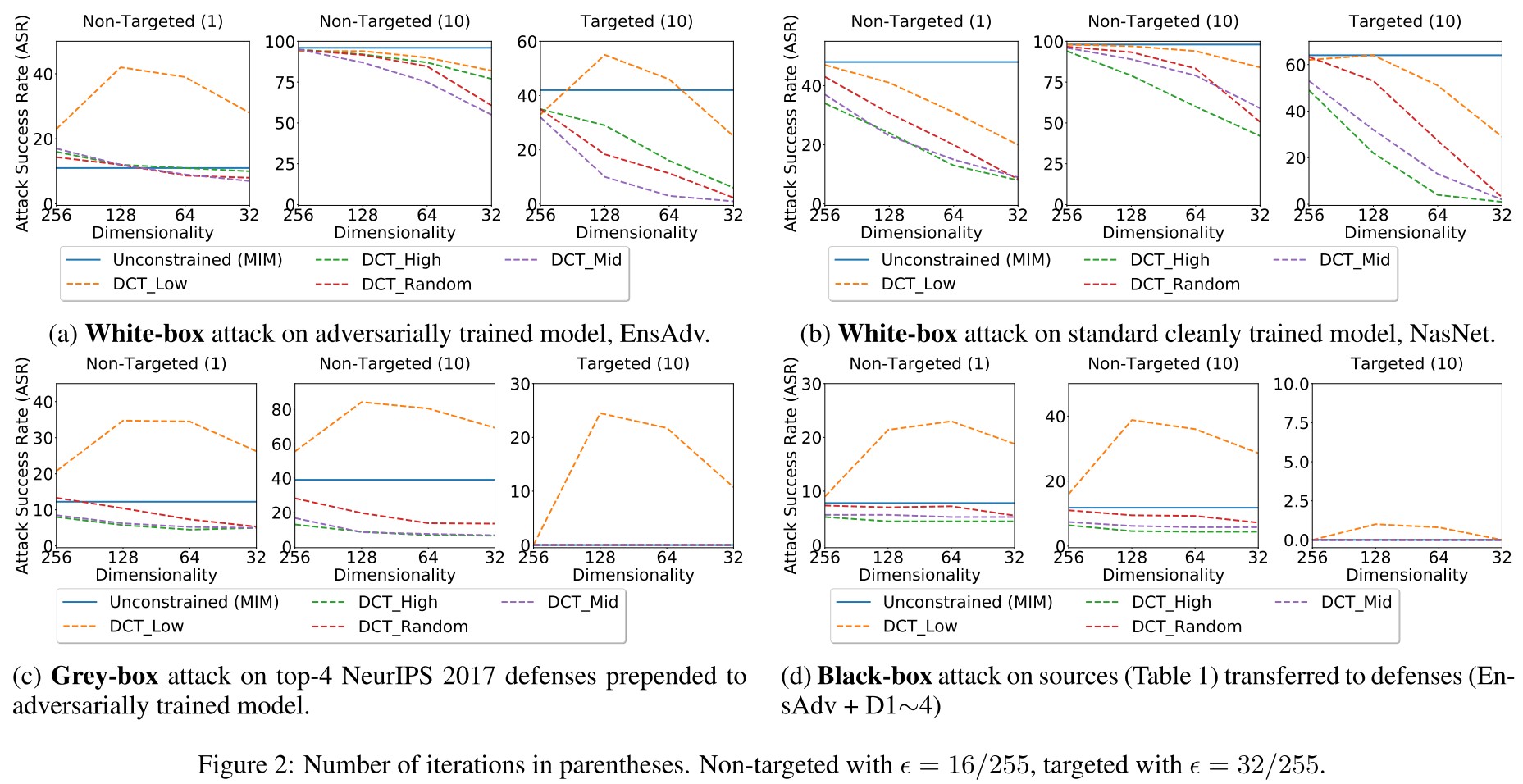

In each figure, the plots are, from left to right, non-targeted attack with iterations = 1, non-targeted with iterations = 10, and targeted with iterations = 10.

As shown in Figure 2, the effectiveness of low frequency perturbations comes not from the reduced search space but from the low frequency regime itself.

Their other conclusions are as follows:

- DCT Low generates effective perturbations faster on adversarially trained models, but not on cleanly trained models.

- DCT Low bypasses defenses prepended to the adversarially trained model.

- DCT Low helps black-box transfer to defended models.

- DCT Low is not effective when transferring between undefended cleanly trained models.

Later work has shown that adversarial training biases the robustness improvement toward high frequency components, which explains the first three findings. The fourth seems to indicate that normally trained models favor high frequency components.

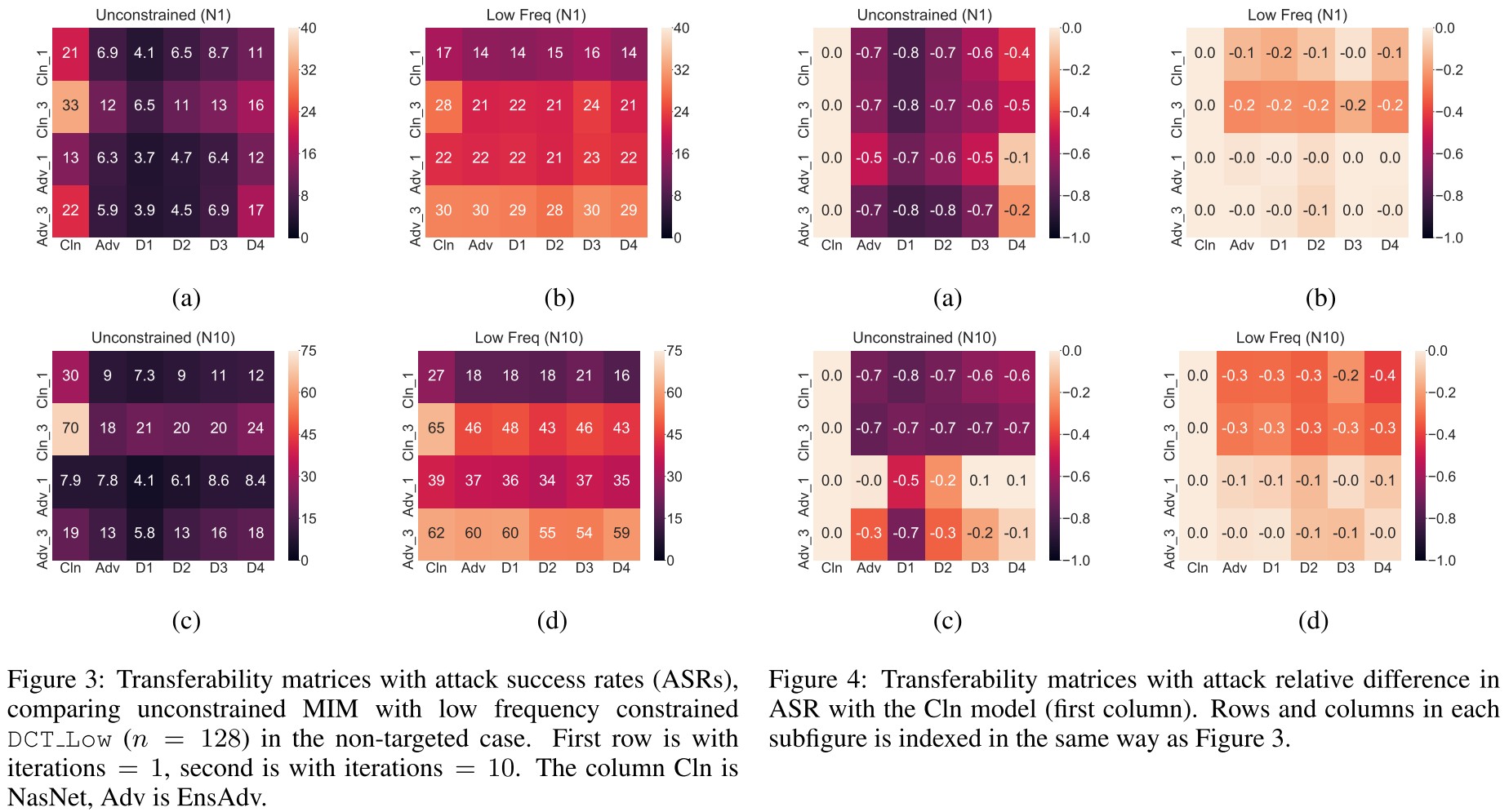

As shown in Figure 4, defended models are roughly as vulnerable as undefended models when faced with low frequency perturbations.

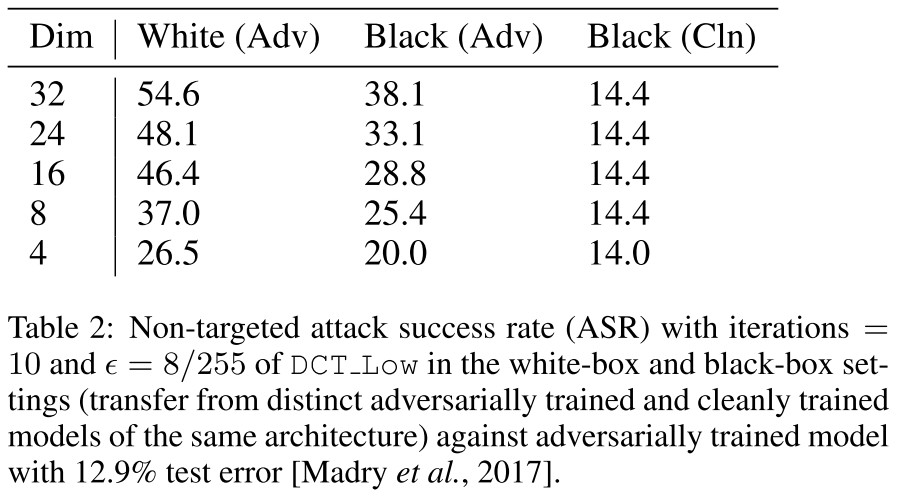

They also test an adversarially trained model on CIFAR-10.

We observe that dimensionality reduction only hurts performance.

This table is a little ambiguous, and it is not explained in detail in the original paper either.

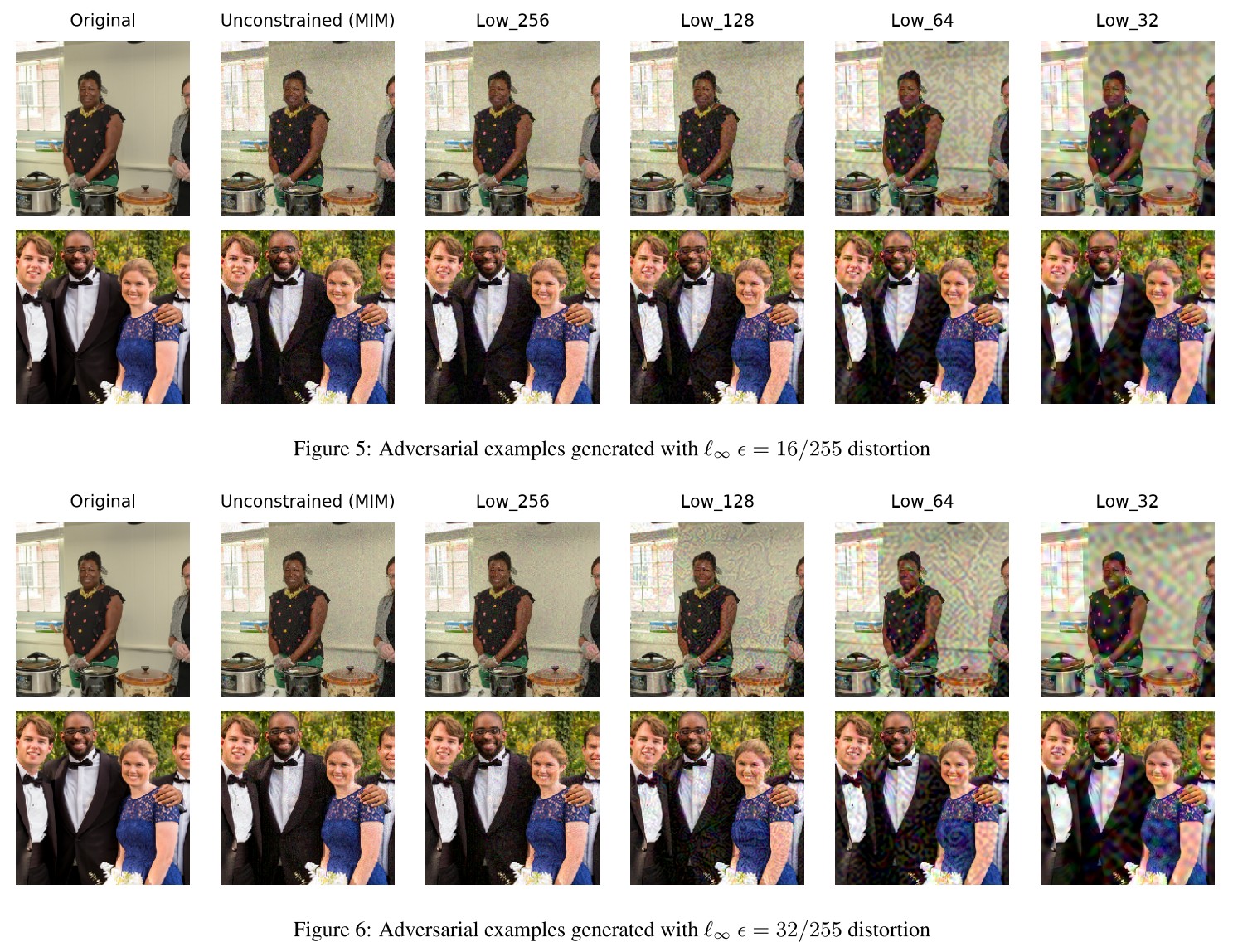

As shown in Figure 5 and Figure 6, low frequency perturbations are perceptible under the $\ell_\infty$-norm with $\epsilon = 16/255$, i.e. one of the competition constraints, suggesting that $\ell_\infty$-norm metrics are unreliable for capturing how humans perceive perturbations compared with machines.

Inspirations

This is an empirical work without much novelty. They demonstrate that low frequency perturbations do not gain their effectiveness from a reduced search space, and that adversarially trained models are about as sensitive to low frequency perturbations as standard models.

I think there will be some work incorporating low frequency perturbations into adversarial training.